
Leading tech gurus and scientists oppose future ‘terminators’

Thanks to science fiction and pop culture, most of us are starry-eyed and awestruck when we imagine the future: towering, glitzy buildings, flying cars, automated machinery and robots serving us in industrial, domestic and military settings. However, the reality may turn out to be quite different. Artificial intelligence is not something to be trifled with, as the possibility of a machine developing consciousness could pose a threat to humankind. However far-fetched it may sound, advances in artificial intelligence may soon reach a point where a system starts thinking on its own.

While automated domestic help is one thing, nations around the world are focusing more on developing artificial intelligence for military purposes, to reduce the loss of human life and property. Since the Iraq and Afghanistan wars, the US has used automated or remotely controlled drones extensively. Moreover, the newer models have pre-designated objectives, reducing the need for continuous remote piloting. Military organisations of major countries are now focusing on intelligent nanotechnology as well as miniature drones capable of surveillance and of taking out targets discreetly. DARPA is a leading name in these developments, having also created prototypes of war machines equipped with lethal weapons. All this raises a question: is the world ready for this?

To alert people and industries to the threat of a backfire, leading tech gurus and scientists have released an open letter that warns the public about the danger of weaponized artificial intelligence. Stephen Hawking, Elon Musk and Steve Wozniak are among the prominent names on the document.

The luminaries believe that for the last 20 years we have been dangerously preoccupied with making strides in the direction of autonomous AI that has decision-making powers.

The letter states – “Artificial Intelligence (AI) technology has reached a point where the deployment of [autonomous] systems is — practically if not legally — feasible within years, not decades, and the stakes are high: autonomous weapons have been described as the third revolution in warfare, after gunpowder and nuclear arms.”

Last year, South Korea revealed armed sentry robots that are currently installed along the border with North Korea. Their cameras and heat sensors allow them to detect and track humans automatically, but the machines need a human operator to fire their weapons.

The researchers have also acknowledged that sending robots into battle may reduce war casualties, but that it also increases the chance of a global AI arms race, with autonomous weapons becoming the “Kalashnikovs of tomorrow”. Because such machines cost far less than nuclear weapons, terrorist organisations as well as military powers could find it easier to mass-produce them. The weapons could eventually reach the black market, where they could be used for assassinations, destabilizing nations, subduing populations and selectively killing members of a particular ethnic group.

Moreover, any misuse of such weapons may lead to mass hysteria as well as public outrage against such military practices. None of this comes as a surprise to anyone who follows the signatories’ careers or keeps up to date with AI news. Wozniak, who co-founded Apple with Steve Jobs, is known to be very vocal on the issue. In June, he posited that a smart AI would wish to control nature itself and would therefore keep humans as “pets.”

“They’ll be so smart by then that they’ll know they have to keep nature, and humans are part of nature. So I got over my fear that we’d be replaced by computers. They’re going to help us. We’re at least the gods originally,” he told an Austin audience at the Freescale Technology Forum 2015.

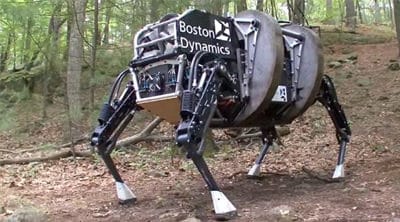

Tesla chief Elon Musk has also spoken about the issue, singling out Google’s rapid push into advanced robotics. The company recently purchased Boston Dynamics, whose four-legged, animal-like robots have unsettled many observers.

“The risk of something seriously dangerous happening is in the five-year timeframe. Ten years at most,” Musk wrote a few months ago in a leaked private comment to an internet publication about the dangers of AI. “Please note that I am normally super pro-technology and have never raised this issue until recent months. This is not a case of crying wolf about something I don’t understand.”

Both Wozniak and Musk have been trying to steer robotics towards the benefit of mankind rather than deadly purposes.

Famed scientist Stephen Hawking, who himself relies on computer-assisted technology for speech and mobility, has said that if technology matches human intellect and capabilities, it would take off on its own and re-design itself without human assistance at an ever-increasing rate.

Some commentators are already comparing Google’s dominance of cyberspace to the Skynet system of the Terminator series.

We come back to the question raised earlier: are we ready for this? Having robots in a country’s arsenal certainly sounds advanced, but how long will it be until something goes wrong? The very same science fiction has taught us that artificial intelligence can turn against us with harmful consequences.

Are we really ready for this?