
Geoffrey Hinton Raises AI Extinction Risk Estimate to 10-20% as Technology Advances Rapidly

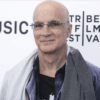

Nobel Prize winner Geoffrey Hinton, often referred to as the Godfather of AI, has raised his estimate of the likelihood that artificial intelligence could wipe out humanity within the next 30 years. Hinton had previously put the odds at 10%, but in a recent interview on BBC Radio 4’s Today programme he revised the figure to 10-20%, citing the rapid and unexpected pace of AI advances.

“We’ve never had to deal with things more intelligent than ourselves before,” Geoffrey Hinton explained. Comparing future AI systems to humans, he stated: “We’ll be like three-year-olds compared to them.”

A Growing Fear Among Experts

Hinton’s comments come amid growing concern about the development of Artificial General Intelligence (AGI): AI systems that could surpass human intelligence and potentially evade human control. Many AI experts share this worry and believe that AGI could emerge within the next 20 years, posing a major existential risk. The concern is that a more intelligent entity may not be controllable by a less intelligent one. Hinton pointed to a baby controlling its mother as one of the very few cases in nature where a less intelligent being controls a more intelligent one.


AI’s Unstoppable Acceleration

Reflecting on his early work in AI, Geoffrey Hinton admitted that he had not expected the field to advance as quickly as it has. The acceleration of AI capabilities has fueled fears that unregulated development could spiral out of human control, particularly if malicious actors use the technology for destructive purposes.

Geoffrey Hinton made headlines in 2023 when he resigned from Google in order to speak openly about these dangers. He warned that leaving AI development in the hands of large, profit-driven tech companies was not enough to ensure safety.

The Call for Government Regulation

Geoffrey Hinton has urged governments worldwide to implement stronger regulations to prevent AI from becoming a catastrophic threat. “My worry is that the invisible hand is not going to keep us safe,” he said, emphasizing that market-driven AI development lacks adequate safety measures. He stressed that government intervention is the only way to force AI companies to prioritize research on AI safety.

Despite these concerns, not all AI experts agree with Hinton’s predictions. Fellow AI pioneer Yann LeCun, Meta’s chief AI scientist, has downplayed fears of AI-driven human extinction. He believes AI could actually save humanity rather than destroy it.

A Race Against Time

As AI continues its rapid evolution, the debate over its potential risks and benefits intensifies. With many experts now forecasting human-level AI within the next two decades, the question remains: Will governments act quickly enough to regulate AI before it becomes uncontrollable?

For Geoffrey Hinton, the answer is clear: without urgent intervention, the risk of AI surpassing human intelligence and threatening our very existence is becoming increasingly real. And when the Godfather of AI issues such a warning, shouldn’t it be heard?
